packages

Contents

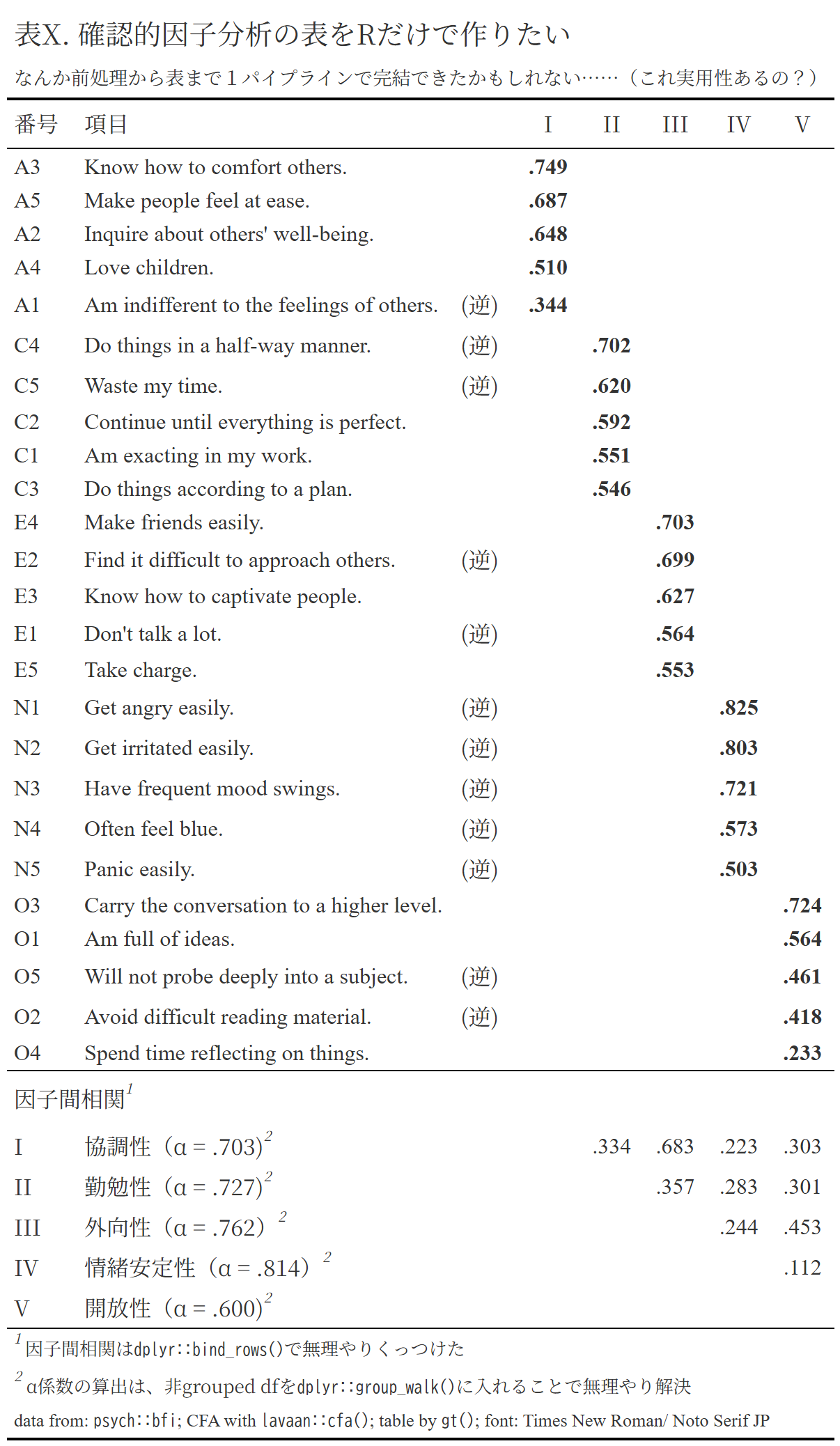

これのことです。

Rの備忘録

— Takuto SAKAI (@tsakai_psych) January 17, 2026

Rだけで確認的因子分析の表をつくってみた、1パイプラインで

なお、1パイプラインで完結させること実用性はほとんどない模様

技術的には可能ってやつ? pic.twitter.com/pg5Flkj25t

タイトルの通りです。1パイプラインで完結するために、かなり無理した書き方をしているところがあります。わざわざ1パイプラインで完結する必要はありません。

非常に長いので折りたたみました。見たい人は開いてみてください。

Code

bfi |>

relocate(

age, gender, education

) |>

mutate(

across(

.cols = bfi.dictionary |>

subset(

Keying == -1

) |>

rownames(),

.fns = \(x) {

7 - x

}

)

) |>

rownames_to_column(var = "id") |>

group_walk(

.f = \(x, idx) {

x |>

pivot_longer(

cols = matches("\\w\\d"),

names_to = "items"

) |>

mutate(

nest_item = str_extract(

items,

pattern = "^\\w"

)

) |>

nest(.by = nest_item) |>

mutate(

res = map(

.x = data,

.f = \(x) {

x |>

pivot_wider(

names_from = items,

values_from = value

) |>

select(matches("\\w\\d")) |>

psych::alpha() |>

_$total |>

_$raw_alpha |>

round(digits = 3) |>

sprintf("%.3f", x = _) |>

str_remove(pattern = "^0")

}

) |>

set_names(nest_item),

.by = nest_item

) |>

pull(res) |>

assign(

"res_alpha",

value = _,

envir = .GlobalEnv

)

}

) |>

cfa(

model = '

Agreeableness =~ A1 + A2 + A3 + A4 + A5

Conscientiousness =~ C1 + C2 + C3 + C4 + C5

Extraversion =~ E1 + E2 + E3 + E4 + E5

Neuroticism =~ N1 + N2 + N3 + N4 + N5

Opennness =~ O1 + O2 + O3 + O4 + O5

',

data = _,

std.lv = TRUE

) |>

assign(

"fit_bfi",

value = _

) |>

inspect(

what = "std.all"

) |>

_$lambda |>

unclass() |>

as_tibble(

rownames = "items"

) |>

arrange(

across(

.cols = c(Agreeableness, Conscientiousness, Extraversion, Neuroticism, Opennness),

.fns = ~desc(.x)

)

) |>

left_join(

y = bfi.dictionary |>

as_tibble(rownames = "items") |>

select(items, Item, Keying),

by = join_by("items")

) |>

relocate(

item_label = Item,

is_rev = Keying,

.after = items

) |>

mutate(

is_rev = if_else(

is_rev > 0,

"",

"rev"

)

) |>

bind_rows(

y = fit_bfi |>

inspect(what = "std.all") |>

_$psi |>

unclass() |>

(\(x) {

`[<-`(x, lower.tri(x, diag = TRUE), NA)

}) () |>

as_tibble(

rownames = "item_label"

) |>

mutate(

items = c("I", "II", "III", "IV", "V")

),

.id = "data_from"

) |>

group_by(data_from) |>

gt() |>

fmt_number(

decimals = 3

) |>

tab_style(

style = cell_text(

weight = "bold",

font = "Times New Roman"

),

locations = list(

cells_body(

columns = Agreeableness,

rows = 1:5

),

cells_body(

columns = Conscientiousness,

rows = 6:10

),

cells_body(

columns = Extraversion,

rows = 11:15

),

cells_body(

columns = Neuroticism,

rows = 16:20

),

cells_body(

columns = Opennness,

rows = 21:25

)

)

) |>

tab_stubhead(

label = "番号"

) |>

tab_header(

title = "表X. 確認的因子分析の表をRだけで作りたい",

subtitle = md("なんか前処理から表まで1パイプラインで完結できたかもしれない……(これ実用性あるの?)")

) |>

tab_source_note(

source_note = md(

"data from: `psych::bfi`; CFA with `lavaan::cfa()`; table by `gt()`; font: Times New Roman/ Noto Serif JP"

)

) |>

tab_footnote(

footnote = md("因子間相関は`dplyr::bind_rows()`で無理やりくっつけた"),

locations = cells_row_groups(

groups = 2

)

) |>

tab_footnote(

footnote = md("α係数の算出は、非grouped dfを`dplyr::group_walk()`に入れることで無理やり解決"),

locations = cells_body(

columns = item_label,

rows = 26:30

)

) |>

tab_style(

style = cell_text(

align = "left"

),

locations = cells_body(

columns = c(items, item_label)

)

) |>

tab_style(

style = cell_borders(

sides = "bottom",

style = "hidden"

),

locations = cells_row_groups()

) |>

tab_style(

style = cell_text(

font = "Times New Roman"

),

locations = list(

cells_body(

columns = c(items, item_label),

rows = 1:25

),

cells_body(

columns = Agreeableness:Opennness,

rows = 26:30

),

cells_source_notes()

)

) |>

tab_style(

style = cell_text(

align = "center"

),

locations = list(

cells_column_labels(columns = Agreeableness:Opennness),

cells_body(columns = Agreeableness:Opennness)

)

) |>

tab_style(

style = cell_text(

size = "small"

),

locations = list(

cells_footnotes(),

cells_source_notes()

)

) |>

sub_zero(

zero_text = ""

) |>

sub_missing(

missing_text = ""

) |>

text_transform(

fn = \(x) {

str_remove(

x,

pattern = "0(?=\\.)"

)

}

) |>

text_replace(

pattern = "rev",

replacement = "(逆)",

locations = cells_body(

columns = is_rev

)

) |>

text_case_match(

"1" ~ "",

"2" ~ "因子間相関",

.locations = cells_row_groups()

) |>

text_case_match(

"Agreeableness" ~ "I",

"Conscientiousness" ~ "II",

"Extraversion" ~ "III",

"Neuroticism" ~ "IV",

"Opennness" ~ "V",

.locations = cells_column_labels()

) |>

text_case_match(

"Agreeableness" ~ paste0(

"協調性(α = ", res_alpha$A, ")"

) |>

md(),

"Conscientiousness" ~ paste0(

"勤勉性(α = ", res_alpha$C, ")"

) |>

md(),

"Extraversion" ~ paste0(

"外向性(α = ", res_alpha$E, ")"

) |>

md(),

"Neuroticism" ~ paste0(

"情緒安定性(α = ", res_alpha$N, ")"

) |>

md(),

"Opennness" ~ paste0(

"開放性(α = ", res_alpha$O, ")"

) |>

md(),

.locations = cells_body(columns = item_label)

) |>

cols_label(

items = "番号",

item_label = "項目",

is_rev = ""

) |>

cols_width(

item_label ~ px(270)

) |>

tab_options(

table.font.names = "Noto Serif JP",

heading.border.bottom.color = "black",

heading.border.bottom.width = px(2),

table.border.top.style = "hidden",

table.border.bottom.width = px(2),

table.border.bottom.color = "black",

table_body.hlines.style = "hidden",

table_body.border.top.color = "black",

table_body.border.top.width = px(1),

table_body.border.bottom.color = "black",

table_body.border.bottom.width = px(1),

column_labels.border.bottom.color = "black",

column_labels.border.bottom.width = px(1),

row_group.border.top.color = "black",

row_group.border.top.width = px(1),

data_row.padding = px(3),

heading.align = "left",

table.margin.left = px(5),

table.margin.right = px(5)

)| 表X. 確認的因子分析の表をRだけで作りたい | |||||||

| なんか前処理から表まで1パイプラインで完結できたかもしれない……(これ実用性あるの?) | |||||||

| 番号 | 項目 | I | II | III | IV | V | |

|---|---|---|---|---|---|---|---|

| A3 | Know how to comfort others. | .749 | |||||

| A5 | Make people feel at ease. | .687 | |||||

| A2 | Inquire about others' well-being. | .648 | |||||

| A4 | Love children. | .510 | |||||

| A1 | Am indifferent to the feelings of others. | (逆) | .344 | ||||

| C4 | Do things in a half-way manner. | (逆) | .702 | ||||

| C5 | Waste my time. | (逆) | .620 | ||||

| C2 | Continue until everything is perfect. | .592 | |||||

| C1 | Am exacting in my work. | .551 | |||||

| C3 | Do things according to a plan. | .546 | |||||

| E4 | Make friends easily. | .703 | |||||

| E2 | Find it difficult to approach others. | (逆) | .699 | ||||

| E3 | Know how to captivate people. | .627 | |||||

| E1 | Don't talk a lot. | (逆) | .564 | ||||

| E5 | Take charge. | .553 | |||||

| N1 | Get angry easily. | (逆) | .825 | ||||

| N2 | Get irritated easily. | (逆) | .803 | ||||

| N3 | Have frequent mood swings. | (逆) | .721 | ||||

| N4 | Often feel blue. | (逆) | .573 | ||||

| N5 | Panic easily. | (逆) | .503 | ||||

| O3 | Carry the conversation to a higher level. | .724 | |||||

| O1 | Am full of ideas. | .564 | |||||

| O5 | Will not probe deeply into a subject. | (逆) | .461 | ||||

| O2 | Avoid difficult reading material. | (逆) | .418 | ||||

| O4 | Spend time reflecting on things. | .233 | |||||

| I | 協調性(α = .703)2 | .334 | .683 | .223 | .303 | ||

| II | 勤勉性(α = .727)2 | .357 | .283 | .301 | |||

| III | 外向性(α = .762)2 | .244 | .453 | ||||

| IV | 情緒安定性(α = .814)2 | .112 | |||||

| V | 開放性(α = .600)2 | ||||||

1 因子間相関はdplyr::bind_rows()で無理やりくっつけた |

|||||||

2 α係数の算出は、非grouped dfをdplyr::group_walk()に入れることで無理やり解決 |

|||||||

data from: psych::bfi; CFA with lavaan::cfa(); table by gt(); font: Times New Roman/ Noto Serif JP |

|||||||

【追記】2026-01-20 gt::gt()はそのままだとHTML出力になるんですが、環境によってはフォントとかが再現されないので、画像を追加しました。

Note処理の過程を見たい人向け(長いよ)

やってること

- 元データの前処理(逆転処理)

dplyr::group_walk()に非grouped dfを突っ込んで、中でα係数の計算をこなして.GrobalEnvに結果を保存しつつ、元のdfをそのまま返してもらうlavaan::cfa()で確認的因子分析をして、因子負荷量を抽出する- 後で使うので、CFAの結果を

assign()で.GrobalEnvに保存しつつ、そのまま処理を続ける

- 後で使うので、CFAの結果を

dplyr::left_join()で、psych::bfi.dictionaryから質問項目と逆転項目かどうかの列をくっつけるdplyr::bind_rows()で、因子間相関のdfを無理やりくっつける- CFAの結果はすでに保存してあるので、そこから抽出

gt::gt()で表づくり- 保存したα係数をくっつける

gt::gt()とViewPipeSteps::print_pipe_step()の相性が悪いので、gt()の前まで。

Code

options(pillar.print_max = 30) # for better tibble displaying

bfi %>%

relocate(

age, gender, education

) %>%

mutate(

across(

.cols = bfi.dictionary %>%

subset(

Keying == -1

) %>%

rownames(),

.fns = \(x) {

7 - x

}

)

) %>%

rownames_to_column(var = "id") %>%

group_walk(

.f = \(x, idx) {

x %>%

pivot_longer(

cols = matches("\\w\\d"),

names_to = "items"

) %>%

mutate(

nest_item = str_extract(

items,

pattern = "^\\w"

)

) %>%

nest(.by = nest_item) %>%

mutate(

res = map(

.x = data,

.f = \(x) {

x %>%

pivot_wider(

names_from = items,

values_from = value

) %>%

select(matches("\\w\\d")) %>%

psych::alpha() %>%

.$total %>%

.$raw_alpha %>%

round(digits = 3) %>%

sprintf("%.3f", x = .) %>%

str_remove(pattern = "^0")

}

) %>%

set_names(nest_item),

.by = nest_item

) %>%

pull(res) %>%

assign(

"res_alpha",

value = .,

envir = .GlobalEnv

)

}

) %>%

lavaan::cfa(

model = '

Agreeableness =~ A1 + A2 + A3 + A4 + A5

Conscientiousness =~ C1 + C2 + C3 + C4 + C5

Extraversion =~ E1 + E2 + E3 + E4 + E5

Neuroticism =~ N1 + N2 + N3 + N4 + N5

Opennness =~ O1 + O2 + O3 + O4 + O5

',

data = .,

std.lv = TRUE

) %>%

assign(

"fit_bfi",

value = .,

envir = .GlobalEnv

) %>%

inspect(

what = "std.all"

) %>%

.$lambda %>%

unclass() %>%

as_tibble(

rownames = "items"

) %>%

arrange(

across(

.cols = c(Agreeableness, Conscientiousness, Extraversion, Neuroticism, Opennness),

.fns = ~desc(.x)

)

) %>%

left_join(

y = bfi.dictionary %>%

as_tibble(rownames = "items") %>%

select(items, Item, Keying),

by = join_by("items")

) %>%

relocate(

item_label = Item,

is_rev = Keying,

.after = items

) %>%

mutate(

is_rev = if_else(

is_rev > 0,

"",

"rev"

)

) %>%

bind_rows(

y = fit_bfi %>%

inspect(what = "std.all") %>%

.$psi %>%

unclass() %>%

(\(x) {

`[<-`(x, lower.tri(x, diag = TRUE), NA)

}) () %>%

as_tibble(

rownames = "item_label"

) %>%

mutate(

items = c("I", "II", "III", "IV", "V")

),

.id = "data_from"

) %>%

group_by(data_from) %>%

ViewPipeSteps::print_pipe_steps()1. bfi# A tibble: 2,800 × 28

A1 A2 A3 A4 A5 C1 C2 C3 C4 C5 E1 E2 E3 E4 E5 N1

<int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int>

1 2 4 3 4 4 2 3 3 4 4 3 3 3 4 4 3

2 2 4 5 2 5 5 4 4 3 4 1 1 6 4 3 3

3 5 4 5 4 4 4 5 4 2 5 2 4 4 4 5 4

4 4 4 6 5 5 4 4 3 5 5 5 3 4 4 4 2

5 2 3 3 4 5 4 4 5 3 2 2 2 5 4 5 2

6 6 6 5 6 5 6 6 6 1 3 2 1 6 5 6 3

7 2 5 5 3 5 5 4 4 2 3 4 3 4 5 5 1

8 4 3 1 5 1 3 2 4 2 4 3 6 4 2 1 6

9 4 3 6 3 3 6 6 3 4 5 5 3 NA 4 3 5

10 2 5 6 6 5 6 5 6 2 1 2 2 4 5 5 5

# ℹ 2,790 more rows

# ℹ 12 more variables: N2 <int>, N3 <int>, N4 <int>, N5 <int>, O1 <int>, O2 <int>, O3 <int>,

# O4 <int>, O5 <int>, gender <int>, education <int>, age <int>2. relocate(age, gender, education)# A tibble: 2,800 × 28

age gender education A1 A2 A3 A4 A5 C1 C2 C3 C4 C5 E1 E2

<int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int> <int>

1 16 1 NA 2 4 3 4 4 2 3 3 4 4 3 3

2 18 2 NA 2 4 5 2 5 5 4 4 3 4 1 1

3 17 2 NA 5 4 5 4 4 4 5 4 2 5 2 4

4 17 2 NA 4 4 6 5 5 4 4 3 5 5 5 3

5 17 1 NA 2 3 3 4 5 4 4 5 3 2 2 2

6 21 2 3 6 6 5 6 5 6 6 6 1 3 2 1

7 18 1 NA 2 5 5 3 5 5 4 4 2 3 4 3

8 19 1 2 4 3 1 5 1 3 2 4 2 4 3 6

9 19 1 1 4 3 6 3 3 6 6 3 4 5 5 3

10 17 2 NA 2 5 6 6 5 6 5 6 2 1 2 2

# ℹ 2,790 more rows

# ℹ 13 more variables: E3 <int>, E4 <int>, E5 <int>, N1 <int>, N2 <int>, N3 <int>, N4 <int>,

# N5 <int>, O1 <int>, O2 <int>, O3 <int>, O4 <int>, O5 <int>3. mutate(across(.cols = bfi.dictionary %>% subset(Keying == -1) %>% rownames(), .fns = function(x) {

7 - x

}))# A tibble: 2,800 × 28

age gender education A1 A2 A3 A4 A5 C1 C2 C3 C4 C5 E1 E2

<int> <int> <int> <dbl> <int> <int> <int> <int> <int> <int> <int> <dbl> <dbl> <dbl> <dbl>

1 16 1 NA 5 4 3 4 4 2 3 3 3 3 4 4

2 18 2 NA 5 4 5 2 5 5 4 4 4 3 6 6

3 17 2 NA 2 4 5 4 4 4 5 4 5 2 5 3

4 17 2 NA 3 4 6 5 5 4 4 3 2 2 2 4

5 17 1 NA 5 3 3 4 5 4 4 5 4 5 5 5

6 21 2 3 1 6 5 6 5 6 6 6 6 4 5 6

7 18 1 NA 5 5 5 3 5 5 4 4 5 4 3 4

8 19 1 2 3 3 1 5 1 3 2 4 5 3 4 1

9 19 1 1 3 3 6 3 3 6 6 3 3 2 2 4

10 17 2 NA 5 5 6 6 5 6 5 6 5 6 5 5

# ℹ 2,790 more rows

# ℹ 13 more variables: E3 <int>, E4 <int>, E5 <int>, N1 <dbl>, N2 <dbl>, N3 <dbl>, N4 <dbl>,

# N5 <dbl>, O1 <int>, O2 <dbl>, O3 <int>, O4 <int>, O5 <dbl>4. rownames_to_column(var = "id")# A tibble: 2,800 × 29

id age gender education A1 A2 A3 A4 A5 C1 C2 C3 C4 C5 E1

<chr> <int> <int> <int> <dbl> <int> <int> <int> <int> <int> <int> <int> <dbl> <dbl> <dbl>

1 61617 16 1 NA 5 4 3 4 4 2 3 3 3 3 4

2 61618 18 2 NA 5 4 5 2 5 5 4 4 4 3 6

3 61620 17 2 NA 2 4 5 4 4 4 5 4 5 2 5

4 61621 17 2 NA 3 4 6 5 5 4 4 3 2 2 2

5 61622 17 1 NA 5 3 3 4 5 4 4 5 4 5 5

6 61623 21 2 3 1 6 5 6 5 6 6 6 6 4 5

7 61624 18 1 NA 5 5 5 3 5 5 4 4 5 4 3

8 61629 19 1 2 3 3 1 5 1 3 2 4 5 3 4

9 61630 19 1 1 3 3 6 3 3 6 6 3 3 2 2

10 61633 17 2 NA 5 5 6 6 5 6 5 6 5 6 5

# ℹ 2,790 more rows

# ℹ 14 more variables: E2 <dbl>, E3 <int>, E4 <int>, E5 <int>, N1 <dbl>, N2 <dbl>, N3 <dbl>,

# N4 <dbl>, N5 <dbl>, O1 <int>, O2 <dbl>, O3 <int>, O4 <int>, O5 <dbl>6. lavaan::cfa(model = "\n Agreeableness =~ A1 + A2 + A3 + A4 + A5\n Conscientiousness =~ C1 + C2 + C3 + C4 + C5\n Extraversion =~ E1 + E2 + E3 + E4 + E5\n Neuroticism =~ N1 + N2 + N3 + N4 + N5\n Opennness =~ O1 + O2 + O3 + O4 + O5\n ", data = ., std.lv = TRUE)lavaan 0.6-19 ended normally after 23 iterations

Estimator ML

Optimization method NLMINB

Number of model parameters 60

Used Total

Number of observations 2436 2800

Model Test User Model:

Test statistic 4165.467

Degrees of freedom 265

P-value (Chi-square) 0.0008. inspect(what = "std.all")$lambda

Agrbln Cnscnt Extrvr Nrtcsm Opnnns

A1 0.344 0.000 0.000 0.000 0.000

A2 0.648 0.000 0.000 0.000 0.000

A3 0.749 0.000 0.000 0.000 0.000

A4 0.510 0.000 0.000 0.000 0.000

A5 0.687 0.000 0.000 0.000 0.000

C1 0.000 0.551 0.000 0.000 0.000

C2 0.000 0.592 0.000 0.000 0.000

C3 0.000 0.546 0.000 0.000 0.000

C4 0.000 0.702 0.000 0.000 0.000

C5 0.000 0.620 0.000 0.000 0.000

E1 0.000 0.000 0.564 0.000 0.000

E2 0.000 0.000 0.699 0.000 0.000

E3 0.000 0.000 0.627 0.000 0.000

E4 0.000 0.000 0.703 0.000 0.000

E5 0.000 0.000 0.553 0.000 0.000

N1 0.000 0.000 0.000 0.825 0.000

N2 0.000 0.000 0.000 0.803 0.000

N3 0.000 0.000 0.000 0.721 0.000

N4 0.000 0.000 0.000 0.573 0.000

N5 0.000 0.000 0.000 0.503 0.000

O1 0.000 0.000 0.000 0.000 0.564

O2 0.000 0.000 0.000 0.000 0.418

O3 0.000 0.000 0.000 0.000 0.724

O4 0.000 0.000 0.000 0.000 0.233

O5 0.000 0.000 0.000 0.000 0.461

$theta

A1 A2 A3 A4 A5 C1 C2 C3 C4 C5 E1 E2 E3 E4 E5 N1

A1 0.882

A2 0.000 0.580

A3 0.000 0.000 0.438

A4 0.000 0.000 0.000 0.740

A5 0.000 0.000 0.000 0.000 0.528

C1 0.000 0.000 0.000 0.000 0.000 0.697

C2 0.000 0.000 0.000 0.000 0.000 0.000 0.650

C3 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.702

C4 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.507

C5 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.615

E1 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.682

E2 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.512

E3 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.607

E4 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.506

E5 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.694

N1 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.320

N2 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000

N3 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000

N4 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000

N5 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000

O1 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000

O2 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000

O3 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000

O4 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000

O5 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000

N2 N3 N4 N5 O1 O2 O3 O4 O5

A1

A2

A3

A4

A5

C1

C2

C3

C4

C5

E1

E2

E3

E4

E5

N1

N2 0.356

N3 0.000 0.481

N4 0.000 0.000 0.672

N5 0.000 0.000 0.000 0.747

O1 0.000 0.000 0.000 0.000 0.682

O2 0.000 0.000 0.000 0.000 0.000 0.826

O3 0.000 0.000 0.000 0.000 0.000 0.000 0.476

O4 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.946

O5 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.000 0.788

$psi

Agrbln Cnscnt Extrvr Nrtcsm Opnnns

Agreeableness 1.000

Conscientiousness 0.334 1.000

Extraversion 0.683 0.357 1.000

Neuroticism 0.223 0.283 0.244 1.000

Opennness 0.303 0.301 0.453 0.112 1.0009. .$lambda Agrbln Cnscnt Extrvr Nrtcsm Opnnns

A1 0.344 0.000 0.000 0.000 0.000

A2 0.648 0.000 0.000 0.000 0.000

A3 0.749 0.000 0.000 0.000 0.000

A4 0.510 0.000 0.000 0.000 0.000

A5 0.687 0.000 0.000 0.000 0.000

C1 0.000 0.551 0.000 0.000 0.000

C2 0.000 0.592 0.000 0.000 0.000

C3 0.000 0.546 0.000 0.000 0.000

C4 0.000 0.702 0.000 0.000 0.000

C5 0.000 0.620 0.000 0.000 0.000

E1 0.000 0.000 0.564 0.000 0.000

E2 0.000 0.000 0.699 0.000 0.000

E3 0.000 0.000 0.627 0.000 0.000

E4 0.000 0.000 0.703 0.000 0.000

E5 0.000 0.000 0.553 0.000 0.000

N1 0.000 0.000 0.000 0.825 0.000

N2 0.000 0.000 0.000 0.803 0.000

N3 0.000 0.000 0.000 0.721 0.000

N4 0.000 0.000 0.000 0.573 0.000

N5 0.000 0.000 0.000 0.503 0.000

O1 0.000 0.000 0.000 0.000 0.564

O2 0.000 0.000 0.000 0.000 0.418

O3 0.000 0.000 0.000 0.000 0.724

O4 0.000 0.000 0.000 0.000 0.233

O5 0.000 0.000 0.000 0.000 0.46110. unclass() Agreeableness Conscientiousness Extraversion Neuroticism Opennness

A1 0.3440969 0.0000000 0.0000000 0.0000000 0.0000000

A2 0.6480625 0.0000000 0.0000000 0.0000000 0.0000000

A3 0.7494317 0.0000000 0.0000000 0.0000000 0.0000000

A4 0.5099514 0.0000000 0.0000000 0.0000000 0.0000000

A5 0.6873613 0.0000000 0.0000000 0.0000000 0.0000000

C1 0.0000000 0.5507480 0.0000000 0.0000000 0.0000000

C2 0.0000000 0.5919409 0.0000000 0.0000000 0.0000000

C3 0.0000000 0.5459679 0.0000000 0.0000000 0.0000000

C4 0.0000000 0.7022903 0.0000000 0.0000000 0.0000000

C5 0.0000000 0.6202606 0.0000000 0.0000000 0.0000000

E1 0.0000000 0.0000000 0.5640632 0.0000000 0.0000000

E2 0.0000000 0.0000000 0.6988480 0.0000000 0.0000000

E3 0.0000000 0.0000000 0.6270618 0.0000000 0.0000000

E4 0.0000000 0.0000000 0.7031669 0.0000000 0.0000000

E5 0.0000000 0.0000000 0.5533894 0.0000000 0.0000000

N1 0.0000000 0.0000000 0.0000000 0.8249066 0.0000000

N2 0.0000000 0.0000000 0.0000000 0.8027075 0.0000000

N3 0.0000000 0.0000000 0.0000000 0.7205144 0.0000000

N4 0.0000000 0.0000000 0.0000000 0.5729313 0.0000000

N5 0.0000000 0.0000000 0.0000000 0.5027198 0.0000000

O1 0.0000000 0.0000000 0.0000000 0.0000000 0.5641192

O2 0.0000000 0.0000000 0.0000000 0.0000000 0.4175189

O3 0.0000000 0.0000000 0.0000000 0.0000000 0.7239194

O4 0.0000000 0.0000000 0.0000000 0.0000000 0.2325609

O5 0.0000000 0.0000000 0.0000000 0.0000000 0.460639411. as_tibble(rownames = "items")# A tibble: 25 × 6

items Agreeableness Conscientiousness Extraversion Neuroticism Opennness

<chr> <dbl> <dbl> <dbl> <dbl> <dbl>

1 A1 0.344 0 0 0 0

2 A2 0.648 0 0 0 0

3 A3 0.749 0 0 0 0

4 A4 0.510 0 0 0 0

5 A5 0.687 0 0 0 0

6 C1 0 0.551 0 0 0

7 C2 0 0.592 0 0 0

8 C3 0 0.546 0 0 0

9 C4 0 0.702 0 0 0

10 C5 0 0.620 0 0 0

11 E1 0 0 0.564 0 0

12 E2 0 0 0.699 0 0

13 E3 0 0 0.627 0 0

14 E4 0 0 0.703 0 0

15 E5 0 0 0.553 0 0

16 N1 0 0 0 0.825 0

17 N2 0 0 0 0.803 0

18 N3 0 0 0 0.721 0

19 N4 0 0 0 0.573 0

20 N5 0 0 0 0.503 0

21 O1 0 0 0 0 0.564

22 O2 0 0 0 0 0.418

23 O3 0 0 0 0 0.724

24 O4 0 0 0 0 0.233

25 O5 0 0 0 0 0.46112. arrange(across(.cols = c(Agreeableness, Conscientiousness, Extraversion, Neuroticism, Opennness), .fns = ~desc(.x)))# A tibble: 25 × 6

items Agreeableness Conscientiousness Extraversion Neuroticism Opennness

<chr> <dbl> <dbl> <dbl> <dbl> <dbl>

1 A3 0.749 0 0 0 0

2 A5 0.687 0 0 0 0

3 A2 0.648 0 0 0 0

4 A4 0.510 0 0 0 0

5 A1 0.344 0 0 0 0

6 C4 0 0.702 0 0 0

7 C5 0 0.620 0 0 0

8 C2 0 0.592 0 0 0

9 C1 0 0.551 0 0 0

10 C3 0 0.546 0 0 0

11 E4 0 0 0.703 0 0

12 E2 0 0 0.699 0 0

13 E3 0 0 0.627 0 0

14 E1 0 0 0.564 0 0

15 E5 0 0 0.553 0 0

16 N1 0 0 0 0.825 0

17 N2 0 0 0 0.803 0

18 N3 0 0 0 0.721 0

19 N4 0 0 0 0.573 0

20 N5 0 0 0 0.503 0

21 O3 0 0 0 0 0.724

22 O1 0 0 0 0 0.564

23 O5 0 0 0 0 0.461

24 O2 0 0 0 0 0.418

25 O4 0 0 0 0 0.23313. left_join(y = bfi.dictionary %>% as_tibble(rownames = "items") %>% select(items, Item, Keying), by = join_by("items"))# A tibble: 25 × 8

items Agreeableness Conscientiousness Extraversion Neuroticism Opennness Item Keying

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <fct> <int>

1 A3 0.749 0 0 0 0 Know how to comf… 1

2 A5 0.687 0 0 0 0 Make people feel… 1

3 A2 0.648 0 0 0 0 Inquire about ot… 1

4 A4 0.510 0 0 0 0 Love children. 1

5 A1 0.344 0 0 0 0 Am indifferent t… -1

6 C4 0 0.702 0 0 0 Do things in a h… -1

7 C5 0 0.620 0 0 0 Waste my time. -1

8 C2 0 0.592 0 0 0 Continue until e… 1

9 C1 0 0.551 0 0 0 Am exacting in m… 1

10 C3 0 0.546 0 0 0 Do things accord… 1

11 E4 0 0 0.703 0 0 Make friends eas… 1

12 E2 0 0 0.699 0 0 Find it difficul… -1

13 E3 0 0 0.627 0 0 Know how to capt… 1

14 E1 0 0 0.564 0 0 Don't talk a lot. -1

15 E5 0 0 0.553 0 0 Take charge. 1

16 N1 0 0 0 0.825 0 Get angry easily. -1

17 N2 0 0 0 0.803 0 Get irritated ea… -1

18 N3 0 0 0 0.721 0 Have frequent mo… -1

19 N4 0 0 0 0.573 0 Often feel blue. -1

20 N5 0 0 0 0.503 0 Panic easily. -1

21 O3 0 0 0 0 0.724 Carry the conver… 1

22 O1 0 0 0 0 0.564 Am full of ideas. 1

23 O5 0 0 0 0 0.461 Will not probe d… -1

24 O2 0 0 0 0 0.418 Avoid difficult … -1

25 O4 0 0 0 0 0.233 Spend time refle… 114. relocate(item_label = Item, is_rev = Keying, .after = items)# A tibble: 25 × 8

items item_label is_rev Agreeableness Conscientiousness Extraversion Neuroticism Opennness

<chr> <fct> <int> <dbl> <dbl> <dbl> <dbl> <dbl>

1 A3 Know how to comf… 1 0.749 0 0 0 0

2 A5 Make people feel… 1 0.687 0 0 0 0

3 A2 Inquire about ot… 1 0.648 0 0 0 0

4 A4 Love children. 1 0.510 0 0 0 0

5 A1 Am indifferent t… -1 0.344 0 0 0 0

6 C4 Do things in a h… -1 0 0.702 0 0 0

7 C5 Waste my time. -1 0 0.620 0 0 0

8 C2 Continue until e… 1 0 0.592 0 0 0

9 C1 Am exacting in m… 1 0 0.551 0 0 0

10 C3 Do things accord… 1 0 0.546 0 0 0

11 E4 Make friends eas… 1 0 0 0.703 0 0

12 E2 Find it difficul… -1 0 0 0.699 0 0

13 E3 Know how to capt… 1 0 0 0.627 0 0

14 E1 Don't talk a lot. -1 0 0 0.564 0 0

15 E5 Take charge. 1 0 0 0.553 0 0

16 N1 Get angry easily. -1 0 0 0 0.825 0

17 N2 Get irritated ea… -1 0 0 0 0.803 0

18 N3 Have frequent mo… -1 0 0 0 0.721 0

19 N4 Often feel blue. -1 0 0 0 0.573 0

20 N5 Panic easily. -1 0 0 0 0.503 0

21 O3 Carry the conver… 1 0 0 0 0 0.724

22 O1 Am full of ideas. 1 0 0 0 0 0.564

23 O5 Will not probe d… -1 0 0 0 0 0.461

24 O2 Avoid difficult … -1 0 0 0 0 0.418

25 O4 Spend time refle… 1 0 0 0 0 0.23315. mutate(is_rev = if_else(is_rev > 0, "", "rev"))# A tibble: 25 × 8

items item_label is_rev Agreeableness Conscientiousness Extraversion Neuroticism Opennness

<chr> <fct> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

1 A3 Know how to comf… "" 0.749 0 0 0 0

2 A5 Make people feel… "" 0.687 0 0 0 0

3 A2 Inquire about ot… "" 0.648 0 0 0 0

4 A4 Love children. "" 0.510 0 0 0 0

5 A1 Am indifferent t… "rev" 0.344 0 0 0 0

6 C4 Do things in a h… "rev" 0 0.702 0 0 0

7 C5 Waste my time. "rev" 0 0.620 0 0 0

8 C2 Continue until e… "" 0 0.592 0 0 0

9 C1 Am exacting in m… "" 0 0.551 0 0 0

10 C3 Do things accord… "" 0 0.546 0 0 0

11 E4 Make friends eas… "" 0 0 0.703 0 0

12 E2 Find it difficul… "rev" 0 0 0.699 0 0

13 E3 Know how to capt… "" 0 0 0.627 0 0

14 E1 Don't talk a lot. "rev" 0 0 0.564 0 0

15 E5 Take charge. "" 0 0 0.553 0 0

16 N1 Get angry easily. "rev" 0 0 0 0.825 0

17 N2 Get irritated ea… "rev" 0 0 0 0.803 0

18 N3 Have frequent mo… "rev" 0 0 0 0.721 0

19 N4 Often feel blue. "rev" 0 0 0 0.573 0

20 N5 Panic easily. "rev" 0 0 0 0.503 0

21 O3 Carry the conver… "" 0 0 0 0 0.724

22 O1 Am full of ideas. "" 0 0 0 0 0.564

23 O5 Will not probe d… "rev" 0 0 0 0 0.461

24 O2 Avoid difficult … "rev" 0 0 0 0 0.418

25 O4 Spend time refle… "" 0 0 0 0 0.23316. bind_rows(y = fit_bfi %>% inspect(what = "std.all") %>% .$psi %>% unclass() %>% (function(x) {

`[<-`(x, lower.tri(x, diag = TRUE), NA)

})() %>% as_tibble(rownames = "item_label") %>% mutate(items = c("I", "II", "III", "IV", "V")), .id = "data_from")# A tibble: 30 × 9

data_from items item_label is_rev Agreeableness Conscientiousness Extraversion Neuroticism

<chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

1 1 A3 Know how to comf… "" 0.749 0 0 0

2 1 A5 Make people feel… "" 0.687 0 0 0

3 1 A2 Inquire about ot… "" 0.648 0 0 0

4 1 A4 Love children. "" 0.510 0 0 0

5 1 A1 Am indifferent t… "rev" 0.344 0 0 0

6 1 C4 Do things in a h… "rev" 0 0.702 0 0

7 1 C5 Waste my time. "rev" 0 0.620 0 0

8 1 C2 Continue until e… "" 0 0.592 0 0

9 1 C1 Am exacting in m… "" 0 0.551 0 0

10 1 C3 Do things accord… "" 0 0.546 0 0

11 1 E4 Make friends eas… "" 0 0 0.703 0

12 1 E2 Find it difficul… "rev" 0 0 0.699 0

13 1 E3 Know how to capt… "" 0 0 0.627 0

14 1 E1 Don't talk a lot. "rev" 0 0 0.564 0

15 1 E5 Take charge. "" 0 0 0.553 0

16 1 N1 Get angry easily. "rev" 0 0 0 0.825

17 1 N2 Get irritated ea… "rev" 0 0 0 0.803

18 1 N3 Have frequent mo… "rev" 0 0 0 0.721

19 1 N4 Often feel blue. "rev" 0 0 0 0.573

20 1 N5 Panic easily. "rev" 0 0 0 0.503

21 1 O3 Carry the conver… "" 0 0 0 0

22 1 O1 Am full of ideas. "" 0 0 0 0

23 1 O5 Will not probe d… "rev" 0 0 0 0

24 1 O2 Avoid difficult … "rev" 0 0 0 0

25 1 O4 Spend time refle… "" 0 0 0 0

26 2 I Agreeableness <NA> NA 0.334 0.683 0.223

27 2 II Conscientiousness <NA> NA NA 0.357 0.283

28 2 III Extraversion <NA> NA NA NA 0.244

29 2 IV Neuroticism <NA> NA NA NA NA

30 2 V Opennness <NA> NA NA NA NA

# ℹ 1 more variable: Opennness <dbl># A tibble: 30 × 9

# Groups: data_from [2]

data_from items item_label is_rev Agreeableness Conscientiousness Extraversion Neuroticism

<chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

1 1 A3 Know how to comf… "" 0.749 0 0 0

2 1 A5 Make people feel… "" 0.687 0 0 0

3 1 A2 Inquire about ot… "" 0.648 0 0 0

4 1 A4 Love children. "" 0.510 0 0 0

5 1 A1 Am indifferent t… "rev" 0.344 0 0 0

6 1 C4 Do things in a h… "rev" 0 0.702 0 0

7 1 C5 Waste my time. "rev" 0 0.620 0 0

8 1 C2 Continue until e… "" 0 0.592 0 0

9 1 C1 Am exacting in m… "" 0 0.551 0 0

10 1 C3 Do things accord… "" 0 0.546 0 0

11 1 E4 Make friends eas… "" 0 0 0.703 0

12 1 E2 Find it difficul… "rev" 0 0 0.699 0

13 1 E3 Know how to capt… "" 0 0 0.627 0

14 1 E1 Don't talk a lot. "rev" 0 0 0.564 0

15 1 E5 Take charge. "" 0 0 0.553 0

16 1 N1 Get angry easily. "rev" 0 0 0 0.825

17 1 N2 Get irritated ea… "rev" 0 0 0 0.803

18 1 N3 Have frequent mo… "rev" 0 0 0 0.721

19 1 N4 Often feel blue. "rev" 0 0 0 0.573

20 1 N5 Panic easily. "rev" 0 0 0 0.503

21 1 O3 Carry the conver… "" 0 0 0 0

22 1 O1 Am full of ideas. "" 0 0 0 0

23 1 O5 Will not probe d… "rev" 0 0 0 0

24 1 O2 Avoid difficult … "rev" 0 0 0 0

25 1 O4 Spend time refle… "" 0 0 0 0

26 2 I Agreeableness <NA> NA 0.334 0.683 0.223

27 2 II Conscientiousness <NA> NA NA 0.357 0.283

28 2 III Extraversion <NA> NA NA NA 0.244

29 2 IV Neuroticism <NA> NA NA NA NA

30 2 V Opennness <NA> NA NA NA NA

# ℹ 1 more variable: Opennness <dbl>Conclusion

データとコードブックがあれば技術的には可能です。 そのうちちゃんとした因子分析のやり方のメモを残そうと思います。

Session Infomation

Notesessioninfo

R version 4.4.2 (2024-10-31 ucrt)

Platform: x86_64-w64-mingw32/x64

Running under: Windows 11 x64 (build 26100)

Matrix products: default

locale:

[1] LC_COLLATE=Japanese_Japan.utf8 LC_CTYPE=Japanese_Japan.utf8 LC_MONETARY=Japanese_Japan.utf8

[4] LC_NUMERIC=C LC_TIME=Japanese_Japan.utf8

time zone: Asia/Tokyo

tzcode source: internal

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] gt_1.2.0 psych_2.5.6 lavaan_0.6-19 lubridate_1.9.4 forcats_1.0.0 stringr_1.5.1

[7] dplyr_1.1.4 purrr_1.1.0 readr_2.1.5 tidyr_1.3.1 tibble_3.3.0 ggplot2_3.5.2

[13] tidyverse_2.0.0

loaded via a namespace (and not attached):

[1] utf8_1.2.6 sass_0.4.10 generics_0.1.4 xml2_1.4.0

[5] stringi_1.8.7 lattice_0.22-7 hms_1.1.3 digest_0.6.37

[9] magrittr_2.0.3 evaluate_1.0.5 grid_4.4.2 timechange_0.3.0

[13] RColorBrewer_1.1-3 fastmap_1.2.0 jsonlite_2.0.0 scales_1.4.0

[17] ViewPipeSteps_0.1.0 pbivnorm_0.6.0 textshaping_1.0.1 mnormt_2.1.1

[21] cli_3.6.5 rlang_1.1.6 litedown_0.7 commonmark_2.0.0

[25] base64enc_0.1-3 withr_3.0.2 yaml_2.3.10 tools_4.4.2

[29] parallel_4.4.2 tzdb_0.5.0 pacman_0.5.1 vctrs_0.6.5

[33] R6_2.6.1 stats4_4.4.2 lifecycle_1.0.4 fs_1.6.6

[37] htmlwidgets_1.6.4 ragg_1.4.0 pkgconfig_2.0.3 pillar_1.11.0

[41] gtable_0.3.6 glue_1.8.0 systemfonts_1.2.3 xfun_0.52

[45] tidyselect_1.2.1 rstudioapi_0.17.1 knitr_1.50 farver_2.1.2

[49] htmltools_0.5.8.1 nlme_3.1-168 rmarkdown_2.29 labeling_0.4.3

[53] compiler_4.4.2 quadprog_1.5-8 markdown_2.0 Log

- 2026-01-20

- HTML出力だと環境によってはフォントが再現されないので、画像を追加。